Unlocking knowledge at Utrecht University

Michelle Boon

Collaboratively transforming the Catalogus Professorum into Linked Open Data

Introduction

The Utrecht University Library (UBU) has embarked on a journey to explore Linked Open Data—a way of making information accessible, reusable and connected—and the UBU Hackathon 2024 was our first hands-on experiment. Over the course of three days, my group and I got our hands dirty with data through learning by doing and collaborating with experts from across the Netherlands.

For our test case we chose the Catalogus Professorum. This collection contains detailed records of Utrecht University’s professors from its founding to the present day. It’s a treasure trove of historical information that sheds light on academic careers, research contributions and the university’s broader role in society. By digitizing and linking this data, we aim to make it searchable and reusable for researchers, students and anyone curious about the university’s history.

Currently the data is accessible via a standard website that doesn’t follow Linked Open Data principles. This means that we can’t ask it questions such as “Where were all the professors educated?”, nor have it create a timeline of all the university’s professors through history. Once the data is in Wikibase, however, its SPARQL endpoint will let us ask complex questions like these, an exciting possibility that gave rise to the hackathon.
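To give a feel for what such a question could look like, here is a minimal sketch in Python that builds a SPARQL query for “Where were all the professors educated?”. Everything specific in it is an assumption for illustration: the endpoint URL, the concept base URI and the property ID `P2` for “educated at” are placeholders, not the identifiers of our actual Wikibase.

```python
from urllib.parse import urlencode

# Hypothetical values: the real endpoint, base URI and property ID
# depend entirely on how the Wikibase instance is configured.
ENDPOINT = "https://wikibase.example.org/sparql"
CONCEPT_BASE = "https://wikibase.example.org"
EDUCATED_AT = "P2"  # placeholder property ID for "educated at"

QUERY_TEMPLATE = """
PREFIX wdt: <{base}/prop/direct/>
PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>

SELECT ?professor ?professorLabel ?school ?schoolLabel WHERE {{
  ?professor wdt:{educated_at} ?school .
  ?professor rdfs:label ?professorLabel .
  ?school rdfs:label ?schoolLabel .
  FILTER(LANG(?professorLabel) = "{lang}" && LANG(?schoolLabel) = "{lang}")
}}
ORDER BY ?professorLabel
"""


def build_education_query(educated_at=EDUCATED_AT, base=CONCEPT_BASE, lang="nl"):
    """Return a SPARQL query listing each professor with a place of education."""
    return QUERY_TEMPLATE.format(base=base, educated_at=educated_at, lang=lang).strip()


def build_request_url(query, endpoint=ENDPOINT):
    """Encode the query as a GET request, as the SPARQL protocol allows."""
    return endpoint + "?" + urlencode({"query": query})


if __name__ == "__main__":
    query = build_education_query()
    print(query)
    print(build_request_url(query))
```

The sketch only builds the query and the request URL; it deliberately sends nothing, since the real endpoint and property IDs would have to be filled in first.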

Hackathon highlights

The hackathon placed a strong emphasis on learning by doing. For instance, before the hackathon, I had never made a Wikibase manifest (a kind of instruction file). Many of my coworkers had never before worked with either OpenRefine or Wikibase. The hackathon attracted a diverse group of participants that included librarians, researchers, curators and data experts; we could never have made it as far as we did without help from the Royal Library, the Dutch Cultural Heritage Agency, the Dutch Digital Heritage Network, the University Library Maastricht, Wikimedia Netherlands and Wikimedia Deutschland.

Besides the learning aspect, the concrete goals for the hackathon were to create a valid data model, a working manifest and a cleaned and enriched dataset, so that all of it could be uploaded to the Wikibase instance, which was created back in 2023 during a WMNL Hackathon.

We had three days of working in the pressure cooker that is a hackathon.

- Day 1: Designing a data model tailored to the dataset.

- Day 2: Writing and implementing a Wikibase manifest.

- Day 3: Data cleaning and enrichment using OpenRefine.

All sessions had some form of training followed by hands-on work. Days 1 and 2 were mainly aimed at experienced, data-oriented staff who spent their time designing a robust data model, then writing and implementing the manifest. These sessions required a solid understanding of data structures and linked data principles.

However, day 3 had a much lower barrier to entry; we changed the focus entirely to training. On the third day we welcomed participants with little to no prior experience, who received hands-on guidance in correcting errors in the data and adding missing information, such as the geo-coordinates of professors’ birthplaces. For this work we used OpenRefine, shifting our emphasis onto building practical skills and empowering a broader group to engage with Linked Open Data workflows.

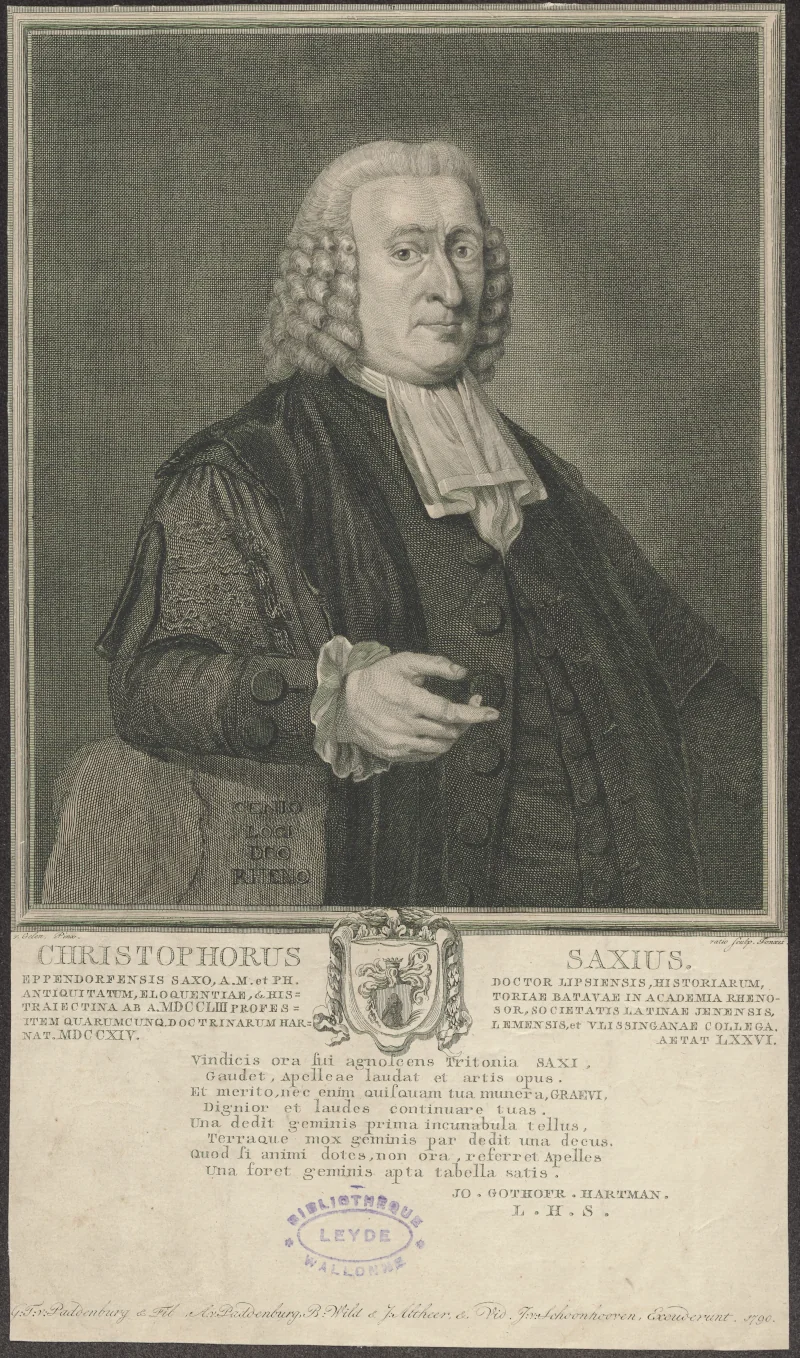

(CC BY 4.0, via Wikimedia Commons)

Challenges and lessons learned

When working with Linked Open Data, there’s always more to learn, but during this work we learned plenty—the importance of setting clear data cleaning rules, designing a valid data model and identifying opportunities to enrich data with additional context.
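One way to make cleaning rules explicit is to write each rule down as a small function, so that everyone applies it the same way. The sketch below is illustrative only: the field names `birthplace` and `birth_coordinates` and the rules themselves are assumptions, not the rules we agreed on, and during the hackathon this kind of work was done in OpenRefine rather than in Python.

```python
def clean_place_name(raw):
    """Rule 1: collapse runs of whitespace. Rule 2: treat empty values as missing."""
    if raw is None:
        return None
    cleaned = " ".join(raw.split())
    return cleaned or None


def clean_record(record):
    """Return a copy of the record with the place-name rules applied."""
    cleaned = dict(record)
    cleaned["birthplace"] = clean_place_name(record.get("birthplace"))
    return cleaned


def needs_coordinates(record):
    """Flag records that name a birthplace but have no coordinates yet."""
    return (
        record.get("birthplace") is not None
        and record.get("birth_coordinates") is None
    )


if __name__ == "__main__":
    # Made-up sample rows, not taken from the Catalogus Professorum.
    rows = [
        {"name": "Example Professor A", "birthplace": "  Utrecht ", "birth_coordinates": None},
        {"name": "Example Professor B", "birthplace": "   ", "birth_coordinates": None},
    ]
    for row in map(clean_record, rows):
        print(row["name"], repr(row["birthplace"]), needs_coordinates(row))
```

Records flagged by `needs_coordinates` are the ones a participant would then enrich by hand or by lookup.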

There was one more thing we learned during the hackathon: how to upload our cleaned data to Wikibase. Initially, we thought creating the aforementioned manifest would suffice. But we discovered on the final day that we also needed a reconciliation service—a tool that matches our data with existing data in Wikibase. Unfortunately, we didn’t have time to set this up during the hackathon, but it’s now a priority for the next phase of the project.
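To illustrate what a reconciliation service is asked to do, the sketch below builds a batch of queries in the general shape used by the Reconciliation Service API that OpenRefine speaks: a JSON object of numbered queries, sent as a `queries` form parameter. The type ID `Q1` and the sample names are placeholders, and the sketch is not the setup we will end up using.

```python
import json
from urllib.parse import urlencode


def build_reconciliation_queries(names, type_id=None, limit=3):
    """Build one reconciliation query per name, keyed q0, q1, ..."""
    queries = {}
    for index, name in enumerate(names):
        query = {"query": name, "limit": limit}
        if type_id is not None:
            # Restrict candidates to one entity type, e.g. "person".
            query["type"] = type_id
        queries[f"q{index}"] = query
    return queries


def encode_form_body(queries):
    """Encode the batch as the form body of a POST request."""
    return urlencode({"queries": json.dumps(queries)})


if __name__ == "__main__":
    # Placeholder names and a hypothetical type ID.
    batch = build_reconciliation_queries(
        ["Example Professor A", "Example Professor B"], type_id="Q1"
    )
    print(json.dumps(batch, indent=2))
    print(encode_form_body(batch))
```

The service answers each query with a list of candidate matches, and it is these matches that let an upload link to existing items instead of creating duplicates.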

Outcomes

What did we achieve? We successfully developed a data model for the Catalogus Professorum in Wikibase. We cleaned and enriched a specific set of data and created a manifest. In addition, many of my coworkers got hands-on experience with Linked Open Data. They also realized how much we need to agree on beforehand when setting rules for cleaning data. From a post-hackathon evaluation I learned that my coworkers very much valued getting that hands-on experience, not to mention working and networking with people from other institutions.

Thus, although we don’t yet have a fully working Wikibase, we absolutely reached our learning goals! This was our top priority, and it made this hackathon a success.

The road ahead

There are a few things left for us to do before we have a fully working Wikibase. One is to set up the reconciliation service, after which we can upload the first dataset and immediately start querying the data.

We also plan to redesign the Catalogus Professorum website, which could lead to running the site with Wikibase. Finally, we’re developing a plan along with several other university libraries to unify several of these catalogues. All the lessons we learned will prove useful in this project.

In closing

Before you start linking data, you’ve got to link up with people and their skills. This hackathon brought together professionals with a wide variety of skills, ranging from data modelers to curators and registrars. The hackathon’s collaborative spirit fostered creativity, problem-solving and a shared commitment to creating open, interconnected knowledge for all.

Our success wouldn’t have been possible without the enthusiasm and dedication of everyone involved in the hackathon. A heartfelt thanks goes out to all the participants for their energy and creativity, to the trainers for sharing their expertise, and to the partners and organizations who supported this initiative: the Royal Library, Dutch Cultural Heritage Agency, Dutch Digital Heritage Network, University Library Maastricht, Wikimedia Netherlands and Wikimedia Deutschland.

Together, we’ve taken a significant step toward making the Catalogus Professorum a valuable resource in the digital age. We look forward to continuing our journey toward open, linked knowledge.

Michelle Boon is a Metadata Specialist Linked Open Data (LOD) at the University Library Utrecht. With years of experience in the Wikimedia community, Michelle has contributed extensively to open-knowledge initiatives, including serving as a Wikipedian in Residence (WiR). Her work bridges cultural heritage and digital innovation, helping organizations unlock the potential of their collections through LOD. Michelle is passionate about making knowledge more accessible, reusable and interconnected for researchers, students and the public alike.
